Inclusive design and development meetup

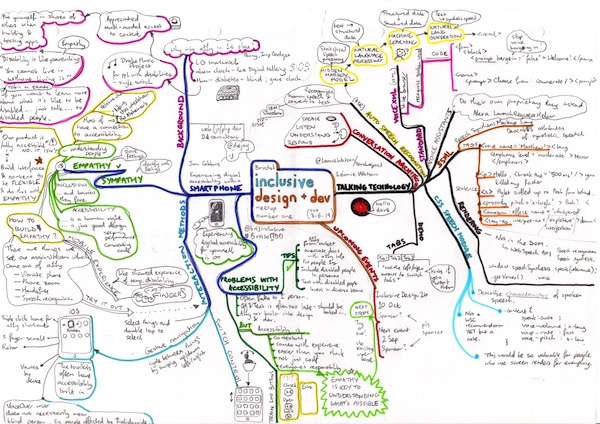

Mind map and text notes from the first Bristol inclusive design and development meetup on 3rd June 2019, which featured two great talks: “Experiencing digital accessibility through a smartphone” by Jon Gibbins, and “Talking Technology” by Léonie Watson.

Jon Gibbins - experiencing digital accessibility with a smartphone

Background

We all have a connection to accessibility - most of us know someone with a disability.

“If you want to learn more about what it’s like to be disabled… just talk… to disabled people” - Robin M Eames.

Sympathy v empathy - not feeling sorry for someone, but sharing their feelings.

We can never say “our product is fully accessible”. It isn’t.

Instead, we build interfaces and content to be flexible and we do this with empathy.

Accessibility is… a human right. Just good design. Security. Performance. Commenting code.

Build empathy by experiencing digital accessibility for yourself.

Interaction methods

iOS and other platforms have lots of accessibility features built in.

Web rotor - cycle through headings, forms, links…

Swipe up/down to move between each one.

Three-finger swipe - scroll the page.

Switch Control - move your head left or right to select an area of the screen and then an icon.

Problems with accessibility and tips

It often falls to one person in the team.

Leaving accessibility to QA and testing is often too late - it should be baked into design and development from the outset.

But: accessibility is easier than you think and is everyone’s responsibility.

Include disabled people in personas and test with disabled people.

Empathy is key to understanding.

Léonie Watson - Talking Technology

Background

Conversation architecture: speak, listen, understand, respond. An early example: IBM Shoebox, a speech recognition machine from the early 1960s.

Automatic speech recognition includes:

Hidden Markov model, natural language processing, machine learning, natural language generation

VoiceXML

A standard that looks a bit like HTML.

<form><block><prompt bargein="false">Welcome!</prompt></block></form>

Voice assistants like Alexa use their own proprietary language.
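As a rough illustration of how the VoiceXML fragment above fits into a document, here is a minimal Python sketch that builds it with the standard library. The `build_welcome_vxml` function name, the `<vxml>` root element and its `version="2.1"` attribute are assumptions added for illustration (the notes only show the inner `<form>` fragment), and a real document would also declare the VoiceXML namespace.

```python
import xml.etree.ElementTree as ET


def build_welcome_vxml(message: str, bargein: bool = False) -> str:
    """Return a simplified VoiceXML document that speaks a single prompt."""
    # Assumption: the <vxml> wrapper and version are not in the notes.
    vxml = ET.Element("vxml", {"version": "2.1"})
    form = ET.SubElement(vxml, "form")
    block = ET.SubElement(form, "block")
    # bargein="false" means the caller cannot interrupt the prompt.
    prompt = ET.SubElement(
        block, "prompt", {"bargein": "true" if bargein else "false"}
    )
    prompt.text = message
    return ET.tostring(vxml, encoding="unicode")


document = build_welcome_vxml("Welcome!")
print(document)
```

Building the markup with an XML library rather than string concatenation keeps it well-formed and escapes any special characters in the message.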

Speech Synthesis Markup Language

Also looks like HTML.

Voice: <voice name="Matthew"><lang= …

Paragraph: <p>Hello, <break time="500ms"/>you killed my father</p>

Sentence: <s>Piglet sidled up to Pooh.</s>

Variations: <prosody pitch="x-high">Pooh?</prosody>

Effects (Amazon-specific): <amazon:effect name="whispered">

Interpretations: <say-as interpret-as="expletive"> … may beep it out.
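The elements listed above can be combined into one SSML document. The following Python sketch is only an illustration: the function names `build_ssml` and `plain_text` are hypothetical, the bare `<speak>` root is a simplification (the full specification expects version and namespace attributes), and vendor extensions such as the Amazon whisper effect are left out.

```python
import xml.etree.ElementTree as ET


def build_ssml() -> str:
    """Assemble a small SSML document from the elements in the notes."""
    speak = ET.Element("speak")

    # Paragraph with a half-second pause in the middle.
    paragraph = ET.SubElement(speak, "p")
    paragraph.text = "Hello, "
    pause = ET.SubElement(paragraph, "break", {"time": "500ms"})
    pause.tail = "you killed my father"

    # A single sentence.
    sentence = ET.SubElement(speak, "s")
    sentence.text = "Piglet sidled up to Pooh."

    # Prosody changes how the text is delivered, not what is said.
    prosody = ET.SubElement(speak, "prosody", {"pitch": "x-high"})
    prosody.text = "Pooh?"

    return ET.tostring(speak, encoding="unicode")


def plain_text(ssml: str) -> str:
    """Return the words that would be spoken, with all markup removed."""
    return "".join(ET.fromstring(ssml).itertext())


ssml = build_ssml()
print(ssml)
print(plain_text(ssml))
```

Stripping the markup with `plain_text` shows that SSML only annotates delivery (pauses, pitch, voice); the underlying text stays the same.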

SSML is not rendered in the DOM; in the browser it is spoken through the Web Speech API instead.

CSS Speech Module

Describes how content should sound when it is spoken.

Not a W3C recommendation YET.

    .content {
        speak: auto;
        voice-volume: x-loud;
        voice-rate: fast;
        voice-pitch: x-low;
    }

This standard would be so valuable for people who use screen readers for everything.